Why use XenForo for Forums?

Many big organizations and companies use forums to engage with their communities. Unlike popular social networks, a forum helps strengthen the community at a higher level. With forums, you get:

- More accurate data structuring.

- Means to use powerful tools to retrieve information.

- Ability to use advanced rating and gamification systems.

- Power to use moderation and anti-spam protection.

In this article, we’ll explain how to use the modern XenForo engine to deploy forums. We’ll add caching based on Memcached and search based on ElasticSearch, a powerful search engine. Both services will run inside Docker containers, which we’ll deploy and manage via the Plesk interface.

In addition, we’ll talk about ways you can use Elastic Stack (ElasticSearch + Logstash + Kibana) to analyse data in the context of Plesk. This will come in handy when analysing search queries or server logs on the forum.

How to Deploy the XenForo Forum on Plesk

Adding a Database

- First, create a subscription for the domain forum.domain.tld in Plesk.

- Then, in the domain’s PHP Settings, select the latest available PHP version (at the time of writing: PHP 7.1.10).

- Go to File Manager. Delete all files and directories in the website’s httpdocs except for favicon.ico.

- Upload the .ZIP file containing the XenForo distribution (Example: xenforo_1.5.15a_332013BAC9_full.zip) to the httpdocs directory.

- Click “Extract Files” to unpack the .ZIP file. Then, select everything in the unpacked archive and click “Move” to transfer the .ZIP file contents to the httpdocs directory. You can delete the upload directory and the xenforo_1.5.15a_332013BAC9_full.zip file afterwards – you won’t need those anymore.

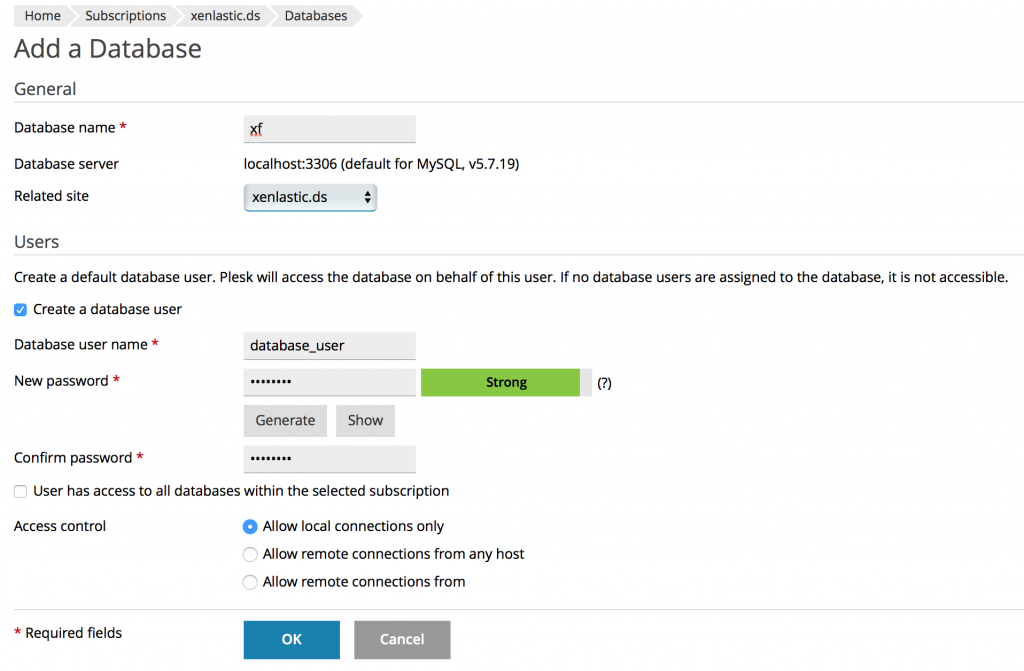

- In the forum.domain.tld subscription, go to Databases.

- Create a database for your future forum. You can choose any database name, username and password.

- And for security reasons, it’s important to set Access control to “Allow local connections only”. Here’s what it looks like:

Installing the Forum

- Go to forum.domain.tld. The XenForo installation menu will appear.

- Follow the on-screen instructions and provide the database name, username and password you set.

- Then, you need to create an administrative account for the forum. After you finish the installation, you can log into your forum’s administrative panel and add the finishing touches.

- Speed up your forum significantly by enabling memcached caching technology and using the corresponding container from your Plesk Docker extension. But before you install it, you need to install the memcached module for the version of PHP used by forum.domain.tld. Here’s how you compile the memcached PHP module on a Debian/Ubuntu Plesk server:

# apt-get update && apt-get install gcc make autoconf libc-dev pkg-config plesk-php71-dev zlib1g-dev libmemcached-dev

Compile the memcached PHP module:

# cd /opt/plesk/php/7.1/include/php/ext

# wget -O phpmemcached-php7.zip https://github.com/php-memcached-dev/php-memcached/archive/php7.zip

# unzip phpmemcached-php7.zip

# cd php-memcached-php7/

# /opt/plesk/php/7.1/bin/phpize

# export CFLAGS="-march=native -O2 -fomit-frame-pointer -pipe"

# ./configure --with-php-config=/opt/plesk/php/7.1/bin/php-config

# make

# make install

Install the compiled module:

# ls -la /opt/plesk/php/7.1/lib/php/modules/

# echo "extension=memcached.so" >/opt/plesk/php/7.1/etc/php.d/memcached.ini

# plesk bin php_handler --reread

# service plesk-php71-fpm restart
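
After the restart, it can be worth confirming that PHP actually sees the new extension. The snippet below is a minimal sketch, not part of the original procedure: the helper name has_php_module is made up for illustration, and it assumes the Plesk PHP 7.1 command-line binary sits next to phpize in /opt/plesk/php/7.1/bin and reads the same php.d directory.

```shell
# Hypothetical helper: reads a module list on stdin (one name per line,
# as printed by `php -m`) and succeeds if the named module is listed.
# The match is case-insensitive and must cover the whole line.
has_php_module() {
  grep -qix -- "$1"
}

# Usage (assumed binary path, see the note above):
#   /opt/plesk/php/7.1/bin/php -m | has_php_module memcached && echo "memcached is loaded"
```

If the check fails, look at the output of the ls command above to see whether memcached.so was really installed into the modules directory.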

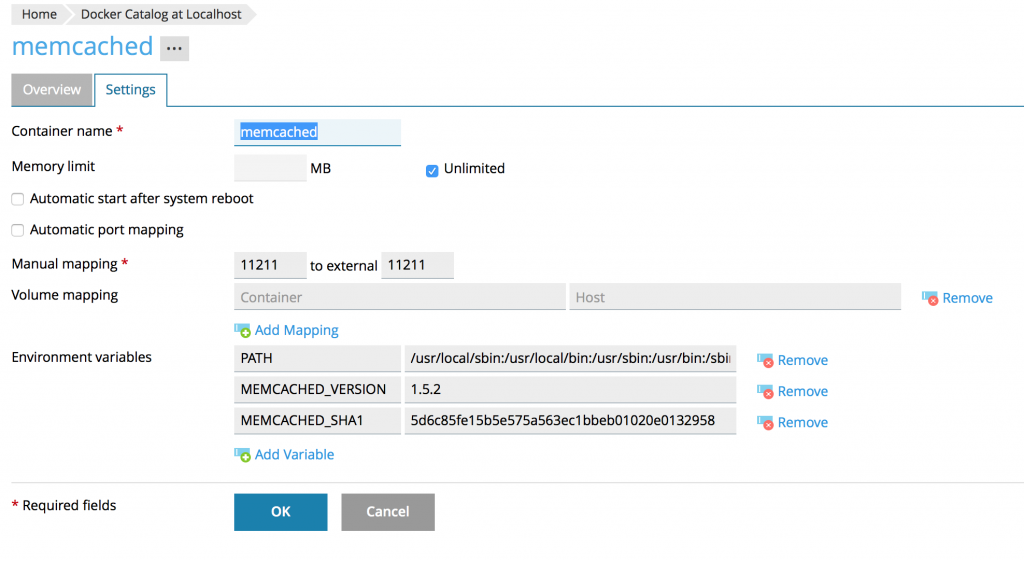

Run Memcached Docker

- Start by opening the Plesk Docker extension. Then, find the memcached Docker image in the catalog in order to install and run it. Here are the settings:

2. This should make port 11211 available on your Plesk server. You can verify this with the following command:

# lsof -i tcp:11211

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

docker-pr 8479 root 4u IPv6 7238568 0t0 TCP *:11211 (LISTEN)

Enable Memcached caching for the forum

- Go to File Manager and open forum.domain.tld/httpdocs/library/config.php in Code Editor.

- Add the following lines to the end of the file:

$config['cache']['enabled'] = true;

$config['cache']['frontend'] = 'Core';

$config['cache']['frontendOptions']['cache_id_prefix'] = 'xf_';

//Memcached

$config['cache']['backend'] = 'Libmemcached';

$config['cache']['backendOptions'] = array(

'compression' => false,

'servers' => array(

array(

'host' => 'localhost',

'port' => 11211,

)

)

);

3. Also, make sure that your forum is working correctly. You can verify that caching is working with the following command:

# { echo "stats"; sleep 1; } | telnet localhost 11211 | grep "get_"

STAT get_hits 1126

STAT get_misses 37

STAT get_expired 0

STAT get_flushed 0
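
The get_hits and get_misses counters are easier to judge as a single hit ratio. Here is a minimal sketch that computes it from the stats output; the function name memcached_hit_ratio is made up for illustration and is not part of memcached or XenForo.

```shell
# Hypothetical helper: reads memcached "stats" output on stdin and prints
# the cache hit ratio as a percentage with two decimals.
memcached_hit_ratio() {
  awk '
    $1 == "STAT" && $2 == "get_hits"   { hits = $3 + 0 }    # + 0 forces a number
    $1 == "STAT" && $2 == "get_misses" { misses = $3 + 0 }
    END {
      total = hits + misses
      if (total > 0) printf "%.2f\n", 100 * hits / total
      else print "n/a"                                      # no lookups recorded yet
    }'
}

# Usage, reusing the command shown above:
#   { echo "stats"; sleep 1; } | telnet localhost 11211 | memcached_hit_ratio
```

For the sample output above (1126 hits, 37 misses) this prints 96.82. A ratio that stays low after the forum has been in use for a while suggests that the cache is not being used as expected.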

Add ElasticSearch search engine

You can improve your XenForo forum even further by adding the powerful ElasticSearch search engine to it.

- First of all, you need to install a XenForo plugin called XenForo Enhanced Search and the ElasticSearch Docker container. Note that the Docker container requires a significant amount of RAM to operate, so make sure that your server has enough memory. You can install the XenForo Enhanced Search plugin by downloading and extracting the .ZIP file via Plesk File Manager.

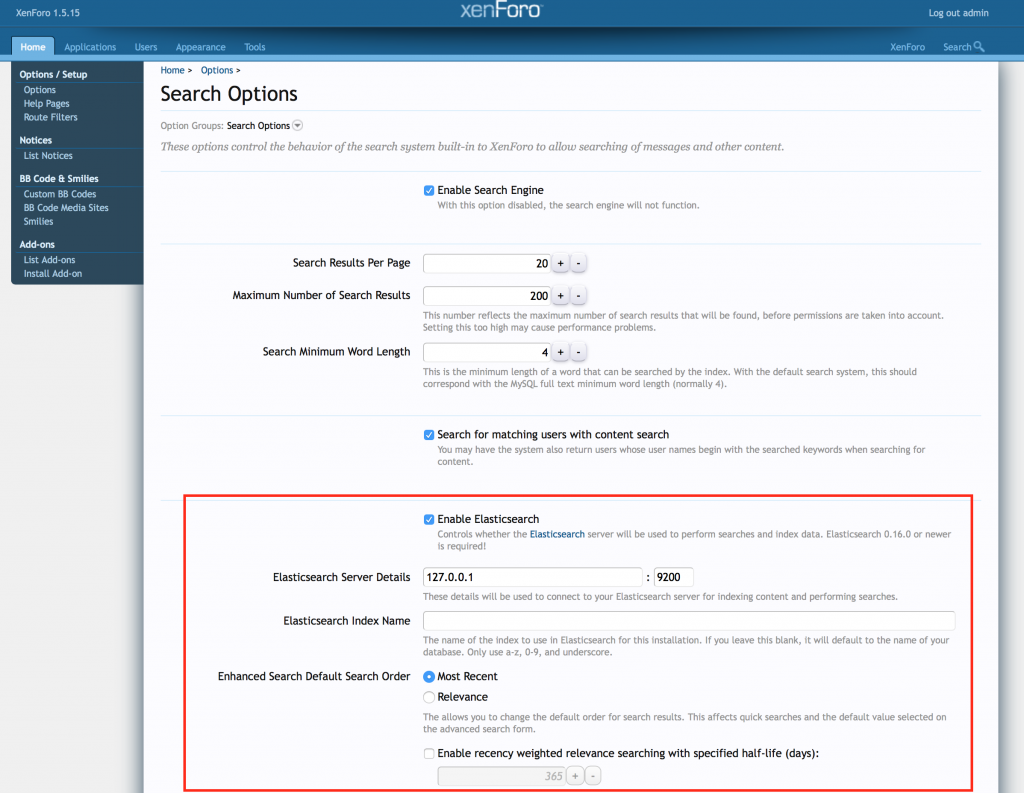

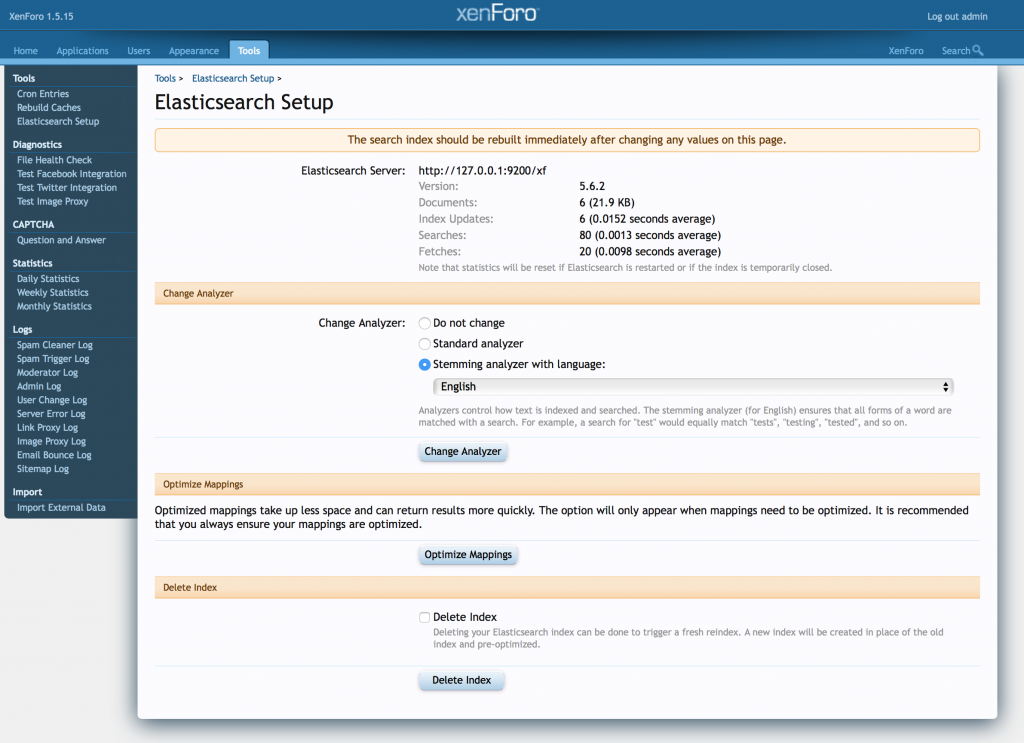

- Read the XenForo documentation to learn how to install XenForo plugins. Once you’re done, the ElasticSearch search engine settings should appear in the forum admin panel:

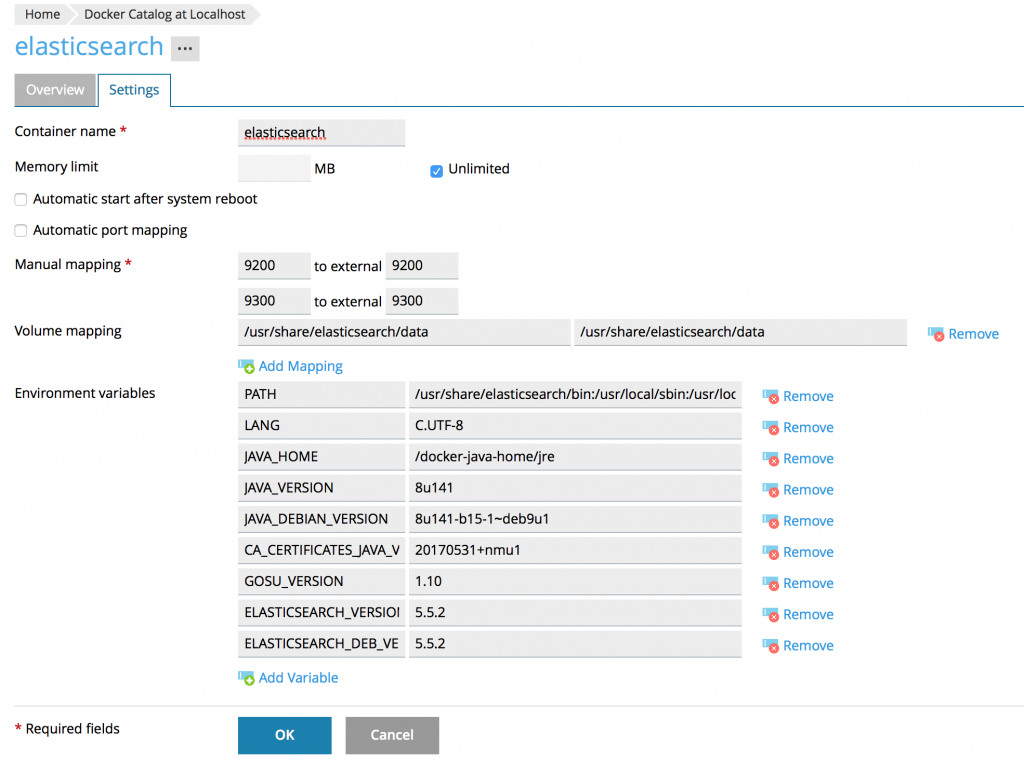

3. In order to get the search to work, you need to install the ElasticSearch Docker container in the Plesk Docker extension with the following settings:

4. Then, verify that port 9200 is open for connection using the following command:

# lsof -i tcp:9200

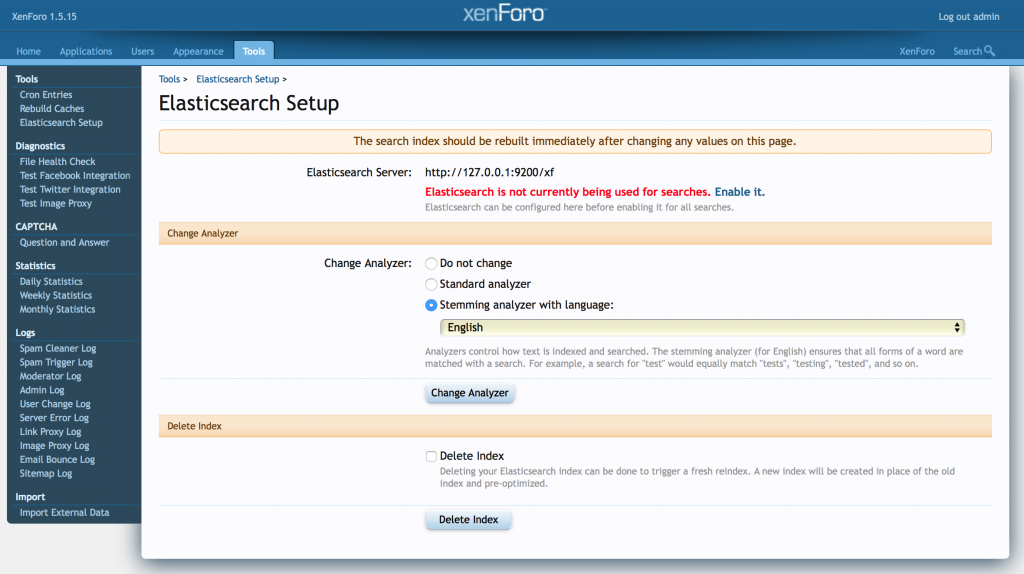

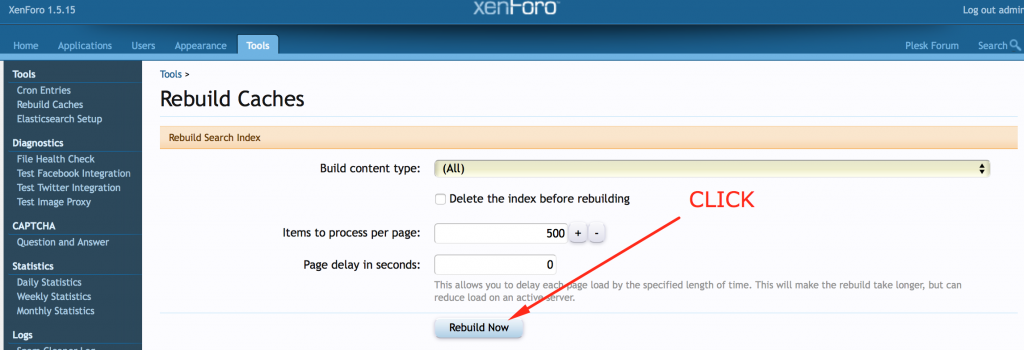

5. After that, you need to make sure that ElasticSearch is connected and create a Search Index in the forum administration panel:
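
Besides the admin panel, you can check from the shell that ElasticSearch answers on port 9200. This is a minimal sketch that extracts the status field from the response of the _cluster/health endpoint; the function name es_cluster_status is made up for illustration, and the URL assumes the container is published on localhost:9200 as described above.

```shell
# Hypothetical helper: reads the JSON response of /_cluster/health on stdin
# and prints the value of its "status" field (green, yellow or red).
# Prints nothing if no such field is found.
es_cluster_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Usage (assumes ElasticSearch listens on localhost:9200):
#   curl -s http://localhost:9200/_cluster/health | es_cluster_status
```

On a single-node setup like this one, yellow is the normal result, because replica shards have no second node to be allocated to.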

Congratulations! You’ve done it. You’ve set up a forum based on the modern XenForo engine supplemented with a powerful search engine and accelerated caching based on Memcached.

Improve your XenForo Forum Further with Kibana

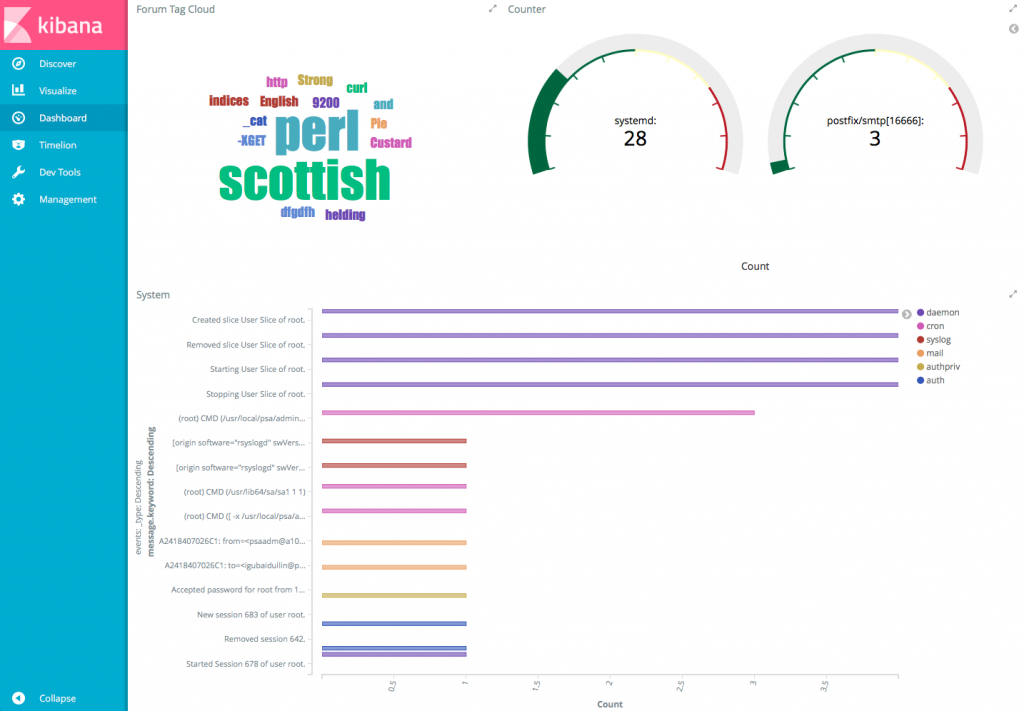

You can make your forum even better and add the ability to analyse forum search queries with Kibana. To do this, follow the steps below:

- You can either use a dedicated Kibana-Docker container or a combined Elasticsearch-Kibana-Docker container.

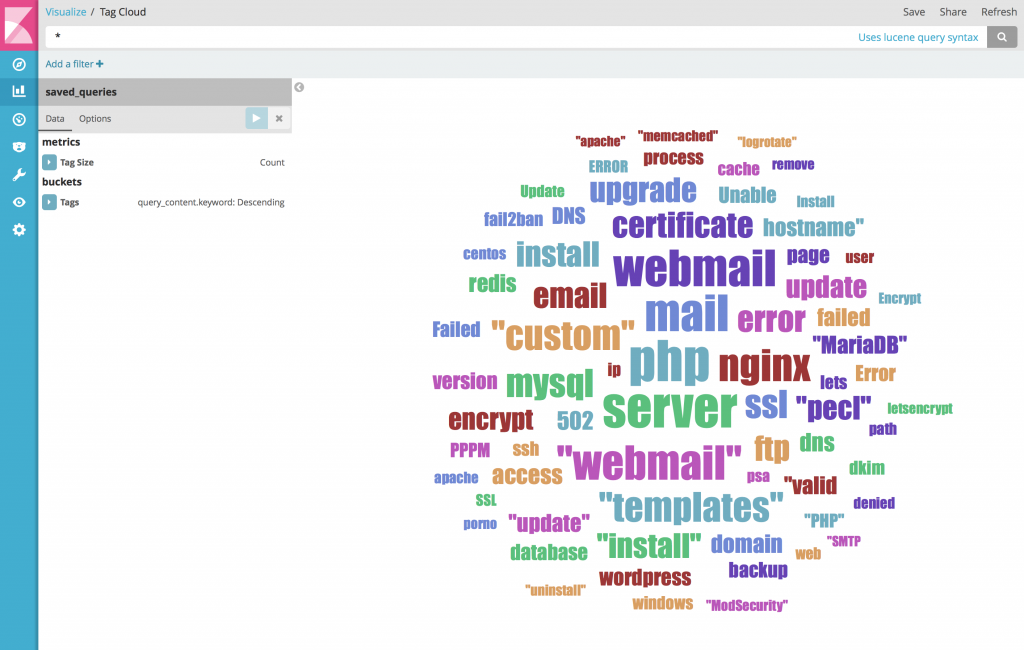

- You’ll also need to install a patch for the XenForo Enhanced Search plugin. This creates a separate ElasticSearch index that stores searches and can be analysed using Kibana. Here’s an example of Tag Cloud Statistics of keywords used in search queries:

Downloading the Patch File

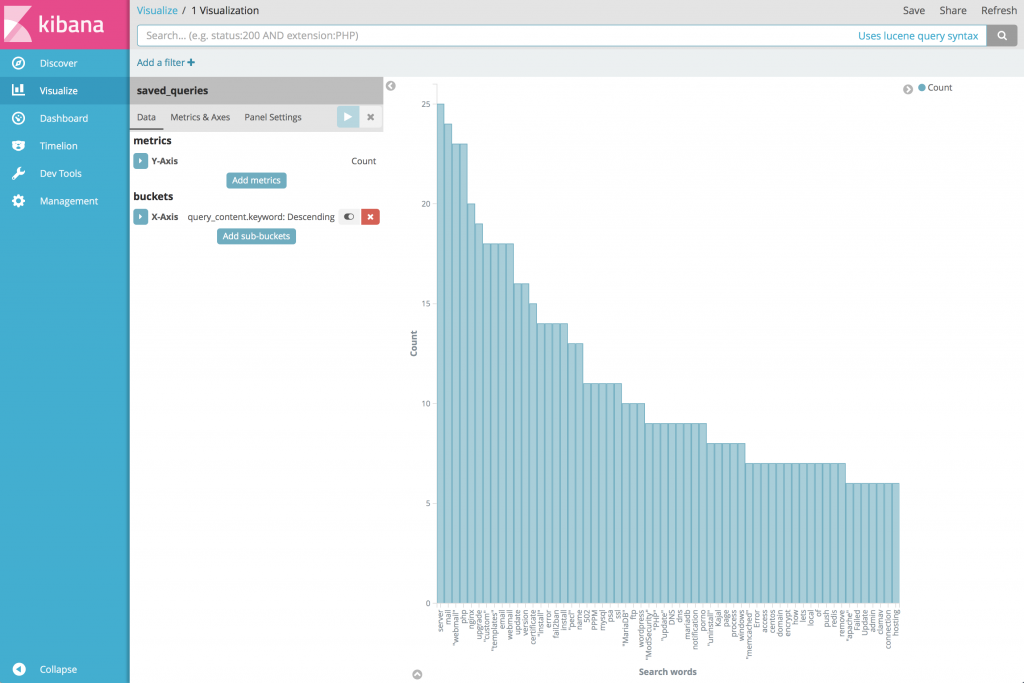

You can download the patched file for version 1.1.6 of the XenForo Enhanced Search plugin. Replace the original file found in httpdocs/library/XenES/Search/SourceHandler with the file you downloaded. In addition to the search index, ElasticSearch will create a separate index named saved_queries which will store search queries to be analysed by Kibana.

Another promising approach is to replace the standard web statistics components in Plesk (Awstats and Webalizer) with a powerful analysis system based on Kibana. There are two options for sending vhost logs to ElasticSearch:

- Using Logstash, another component of the Elastic Stack.

- Using the rsyslog service with the omelasticsearch.so plugin installed (yum install rsyslog-elasticsearch). This way, you can directly send log data to ElasticSearch. This is very cool, because you do not need an extra step like with Logstash.

Important Warning:

The logs must be in JSON format for ElasticSearch to store them properly and for Kibana to parse them. However, you can’t change the log_format nginx parameter on the vhost level.

Possible Solution:

Use the Filebeat service, which can take the regular log of nginx, Apache or another service, convert it into the required format (for example, JSON) and then pass it on. As an added benefit, this service lets you collect logs from different servers. There are many opportunities to experiment.
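
To make the conversion step concrete, here is a rough sketch in plain shell that turns one nginx access log line into a JSON document. It is not Filebeat and not a Filebeat configuration, just an illustration of the idea. It assumes the default nginx "combined" log format, and the function name and the JSON field names are made up for this example.

```shell
# Hypothetical helper: reads nginx "combined" access log lines on stdin and
# prints one JSON object per line. Lines that do not match are dropped.
nginx_line_to_json() {
  # First double any backslashes (nginx writes escapes such as \x22) so the
  # result stays valid JSON, then map the leading log fields to JSON keys.
  sed -nE 's/\\/\\\\/g; s/^([^ ]+) [^ ]+ ([^ ]+) \[([^]]+)\] "([^"]*)" ([0-9]{3}) ([0-9]+|-).*$/{"remote_addr":"\1","user":"\2","time_local":"\3","request":"\4","status":\5,"bytes":"\6"}/p'
}

# Usage (the log path is an assumption, adjust it to your vhost):
#   tail -n 5 /path/to/access_log | nginx_line_to_json
```

A real shipper also handles log rotation, retries and delivery to ElasticSearch, which is why a dedicated service is the better choice in production.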

Using rsyslog, you can send any other system log to ElasticSearch to be analysed with Kibana – and it’s quite workable. For example, here’s an rsyslog configuration, /etc/rsyslog.d/syslogs.conf, for sending your local syslog to Elasticsearch in a Logstash/Kibana-friendly way after you start the rsyslog service with the command rsyslogd -dn:

# for listening to /dev/log

module(load="omelasticsearch") # for outputting to Elasticsearch

# this is for index names to be like: logstash-YYYY.MM.DD

template(name="logstash-index"

type="list") {

constant(value="logstash-")

property(name="timereported" dateFormat="rfc3339" position.from="1" position.to="4")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="6" position.to="7")

constant(value=".")

property(name="timereported" dateFormat="rfc3339" position.from="9" position.to="10")

}

# this is for formatting our syslog in JSON with @timestamp

template(name="plain-syslog"

type="list") {

constant(value="{")

constant(value="\"@timestamp\":\"") property(name="timereported" dateFormat="rfc3339")

constant(value="\",\"host\":\"") property(name="hostname")

constant(value="\",\"severity\":\"") property(name="syslogseverity-text")

constant(value="\",\"facility\":\"") property(name="syslogfacility-text")

constant(value="\",\"tag\":\"") property(name="syslogtag" format="json")

constant(value="\",\"message\":\"") property(name="msg" format="json")

constant(value="\"}")

}

# this is where we actually send the logs to Elasticsearch (localhost:9200 by default)

action(type="omelasticsearch"

template="plain-syslog"

searchIndex="logstash-index"

dynSearchIndex="on"

bulkmode="on" # use the bulk API

action.resumeretrycount="-1" # retry indefinitely if Logsene/Elasticsearch is unreachable

)
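
The logstash-index template builds the index name by cutting character positions 1 to 4, 6 to 7 and 9 to 10 out of the RFC 3339 timestamp. The following sketch reproduces that logic in shell so you can see which index a given log entry ends up in; the function name logstash_index_name is made up for illustration.

```shell
# Hypothetical helper: takes an RFC 3339 timestamp as its first argument and
# prints the index name that the logstash-index template would produce.
logstash_index_name() {
  ts=$1
  year=$(printf '%s\n' "$ts" | cut -c1-4)    # position.from="1" position.to="4"
  month=$(printf '%s\n' "$ts" | cut -c6-7)   # position.from="6" position.to="7"
  day=$(printf '%s\n' "$ts" | cut -c9-10)    # position.from="9" position.to="10"
  printf 'logstash-%s.%s.%s\n' "$year" "$month" "$day"
}

# Usage:
#   logstash_index_name 2017-10-10T09:15:00+02:00
```

For a message reported on 10 October 2017, this gives logstash-2017.10.10, so each day gets its own index.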

You can see that ElasticSearch index logstash-2017.10.10 was successfully created and is ready for Kibana analysis:

# curl -XGET 'http://localhost:9200/_cat/indices?v'

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

yellow open .kibana TYNVVyktQSuH-oiVO59WKA 1 1 4 0 15.8kb 15.8kb

yellow open xf JGCp9D_WSGeuOISV9EPy2g 5 1 6 0 21.8kb 21.8kb

yellow open logstash-2017.10.10 NKFmuog8Si6erk_vFmKNqQ 5 1 9 0 46kb 46kb

yellow open saved_queries GkykvFzxTiWvST53ZzunfA 5 1 16 0 43.7kb 43.7kb
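
If you only want to see how many documents each index holds, you can trim that listing down. This is a minimal sketch that relies on the column order shown above (index in column 3, docs.count in column 7); the function name index_doc_counts is made up for illustration.

```shell
# Hypothetical helper: reads the output of /_cat/indices?v on stdin, skips
# the header row and prints "index docs.count" for every index.
index_doc_counts() {
  awk 'NR > 1 && NF >= 7 { printf "%s %s\n", $3, $7 }'
}

# Usage (assumes ElasticSearch listens on localhost:9200):
#   curl -s 'http://localhost:9200/_cat/indices?v' | index_doc_counts
```

Running it a few times while using the forum search is a simple way to watch the saved_queries index grow.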

You can create a Kibana Dashboard with a custom visualization showing the desired data, like this:

Your community on the XenForo platform

So you can now set up a modern platform for working with the community with the additional ability to collect and analyse all kinds of statistical data.

Of course, this article is not meant to be a comprehensive, “one stop shop” guide. It does not cover many important aspects, like security, for example. Think of this as a gentle nudge meant to spur your curiosity and describe possible scenarios and ways of implementing them. Experienced administrators can configure more advanced settings by themselves.

In conclusion, think of the Elastic Stack as a tool or a construction set you can use to get results to your own liking. Just make sure to feed it the correct data you want to work with.

7 Comments

Hello Igor: your posts on Plesk’s blog using Docker are very good. You think it would be possible to do a post explaining how to use ffmpeg using docker. I think it would be a very used resource. The tutorials on the internet are very bad and confuse a lot. I think it would even be a good idea for plesk to implement this resource easily for users, using docker or a plesk extension.

Thanks for the idea. Perhaps I will find time for such instructions, after I study this question in detail.

Igor, nice work 🙂

Any ideas how to make same with Xenforo 2.0 and Enhanced Search 2.0?

This would be very nice to understand.

I have not investigated this question regarding XenForo 2.0 and Enhanced Search 2.0, but at first glance, it seems to me there are not so many differences. In general, this instruction can be an example, a direction in which all these things can be implemented for the new XenForo version.

Hello, any chance you could provide the volume mapping and environment variables for elasticsearch v6?

Since elasticsearch 5 is EOL, I’d like to use v6.8.6 instead.

As far as I know, there is no need to make any special changes for ElasticSearch version 6.x.

Everything is the same there as for 5.x versions.