Docker is one of the most successful open source projects in recent history, and it’s fundamentally shifting the way people think about building, shipping and running applications. If you’re in the tech industry, chances are you’re already aware of the project. We’re going to look at 6 key points about Docker.

According to Docker Captain Alex Ellis, containers are disruptive and are changing the way we build and partition our applications in the cloud. Gone are monolithic systems; in come microservices, auto-scaling and self-healing infrastructure. Forget heavyweight SOAP interfaces – REST APIs are the new lingua franca.

Whether you are wondering how Docker fits into your stack or are already leading the way – here are 6 essential facts that you and your team need to know about containers.

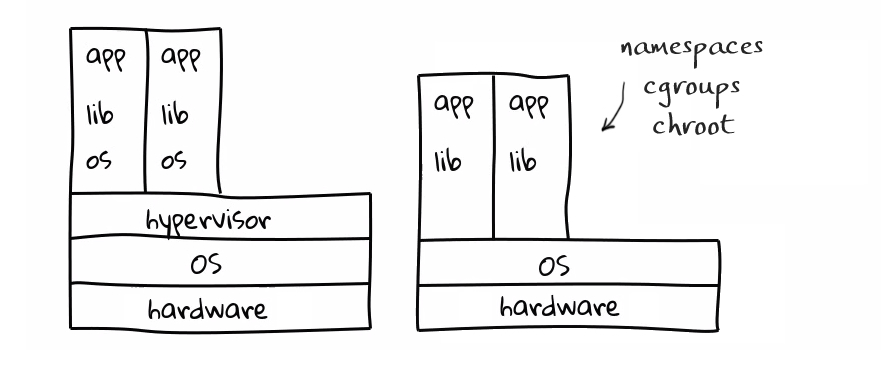

1. Containers are not VMs

Containers and virtual machines have similar resource isolation and allocation benefits, but they take different architectural approaches, and that difference is what makes containers more portable and efficient.

Virtual machines

VMs include the application, the necessary binaries and libraries, and an entire guest operating system, all of which can amount to tens of GBs. VMs run on top of a physical machine using a hypervisor. The hypervisors themselves run on physical computers, referred to as the “host machine”. The host machine provides the VMs with resources, including RAM and CPU, and these resources are divided among the VMs. So if one VM is running a more resource-heavy application, more resources would be allocated to it than to the other VMs running on the same host machine.

The VM that is running on the host machine is also often called a “guest machine.”

This guest machine contains both the application and whatever it needs to run that application (e.g. system binaries, libraries). It also carries an entire virtualized hardware stack of its own, including virtualized network adapters, storage, and CPU — which means it in turn has its own full-fledged guest operating system. From the inside, the guest machine behaves as its own unit with its own dedicated resources. From the outside, we know that it’s a VM — sharing resources provided by the host machine.

Containers

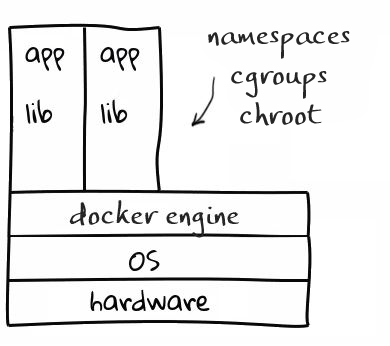

For all intents and purposes, containers look like VMs. The *key* is that the underlying architecture is fundamentally different: containers *share* the host system’s kernel with other containers. The image above shows that containers package up just the user space, and not the kernel or virtual hardware like a VM does.

Each container gets its own isolated user space, which allows multiple containers to run on a single host machine, while the operating-system-level architecture (the kernel) is shared across all of them.

The only parts that are created per container are the application’s binaries and libraries – this is what makes containers so lightweight and portable. Virtual machines are built in the opposite direction: they start with a full operating system and, depending on the application, developers may or may not be able to strip out unwanted components.
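One quick way to see the shared kernel for yourself is to compare what a process reports on the host and inside a container. The Python sketch below is our own illustration (the helper name `kernel_info` is hypothetical, not part of any Docker tooling): it prints the kernel release the current process runs on and the user-space distribution it sees, assuming a Linux system that ships `/etc/os-release`.

```python
import platform


def kernel_info() -> dict:
    """Describe the kernel this process runs on and the user-space OS it sees."""
    info = {"system": platform.system(), "kernel_release": platform.release()}
    # /etc/os-release describes the user-space distribution (present on most
    # Linux systems and in most Linux container images).
    try:
        with open("/etc/os-release") as f:
            fields = dict(
                line.rstrip("\n").split("=", 1) for line in f if "=" in line
            )
        info["distribution"] = fields.get("PRETTY_NAME", "").strip('"')
    except OSError:
        # Not Linux, or the file is missing: no distribution information.
        info["distribution"] = None
    return info


if __name__ == "__main__":
    print(kernel_info())
```

On a Linux host, compare the kernel release with what a container reports, for example via `docker run --rm python:3 python -c "import platform; print(platform.release())"`. The kernel release should match the host’s, while the distribution inside the container is whatever the image ships. On Docker Desktop for Mac or Windows, the kernel you see is the one of Docker’s Linux VM rather than your desktop OS.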

- Containers provide much of the same functionality as VMs, without the hypervisor overhead

- Containers are more lightweight than VMs, since they share the host’s kernel instead of relying on hardware emulation (a hypervisor)

- Docker is not a virtualization technology; it’s an application delivery technology.

- A container is “just” a process – literally, a container is not “a thing”.

- Containers use kernel features such as kernel namespaces and control groups (cgroups)

- Kernel namespaces provide basic isolation, and cgroups are used for resource allocation

Namespaces

- Kernel namespaces provide basic isolation

- They ensure that each container cannot see or affect other containers

- For example, with namespaces you can have multiple processes with the same PID in different environments (containers)

- Six types of namespaces are the most relevant to containers:

  - pid (processes)

  - net (network interfaces, routing…)

  - ipc (System V IPC)

  - mnt (mount points, filesystems)

  - uts (hostname)

  - user (user and group IDs)
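Because a container is just a process, its namespaces are visible from the host through `/proc/<pid>/ns` on Linux: each entry is a link such as `pid:[4026531836]`, and two processes share a namespace of that type when the links match. A minimal Python sketch, assuming Linux with `/proc` mounted; the helper names `namespace_ids` and `shared_namespaces` are hypothetical:

```python
import os

# The six namespace types listed above; newer kernels expose more entries
# (for example "cgroup" and "time"), which this sketch ignores.
NAMESPACE_TYPES = ("pid", "net", "ipc", "mnt", "uts", "user")


def namespace_ids(pid="self"):
    """Return {namespace type: link target}, e.g. {"pid": "pid:[4026531836]"}."""
    ns_dir = os.path.join("/proc", str(pid), "ns")
    result = {}
    if not os.path.isdir(ns_dir):
        return result  # not Linux, or /proc is not mounted
    for ns_type in NAMESPACE_TYPES:
        try:
            result[ns_type] = os.readlink(os.path.join(ns_dir, ns_type))
        except OSError:
            # Entry missing on an old kernel, or permission denied
            # (reading another user's process usually needs root).
            continue
    return result


def shared_namespaces(pid_a, pid_b):
    """List the namespace types in which two processes have the same namespace."""
    a, b = namespace_ids(pid_a), namespace_ids(pid_b)
    return [t for t in NAMESPACE_TYPES if t in a and a[t] == b.get(t)]


if __name__ == "__main__":
    for ns_type, target in namespace_ids().items():
        print(f"{ns_type:5} {target}")
```

To compare a container with the host, you could look up the host PID of the container’s main process with `docker inspect --format '{{.State.Pid}}' <container>` and pass it to `shared_namespaces` alongside `"self"` (as root). By default you would typically expect pid, net, ipc, mnt and uts to differ, while user is shared unless user-namespace remapping is enabled.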

CGroups

- Cgroups (control groups) allocate resources and limit how much of them (memory, CPU, disk I/O) a process can use

- They ensure that each container gets its fair share of memory, CPU and disk I/O

- They also keep a single container from over-consuming those resources
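With the cgroup v2 (unified) hierarchy, the limits a container runs under are plain text files such as `cpu.max` (`"$MAX $PERIOD"` in microseconds, or `max` for unlimited) and `memory.max` (bytes, or `max`). The Python sketch below, which assumes cgroup v2 (cgroup v1 uses different files), shows how those values translate into CPUs and bytes; the function names are our own:

```python
import os
from typing import Optional


def parse_cpu_max(text: str) -> Optional[float]:
    """Turn the contents of cpu.max ("$MAX $PERIOD") into a number of CPUs.

    "max 100000" means no limit (None); "50000 100000" means the group may
    use 50 ms of CPU time per 100 ms period, i.e. half of one CPU.
    """
    quota, period = text.split()
    if quota == "max":
        return None
    return int(quota) / int(period)


def parse_memory_max(text: str) -> Optional[int]:
    """Turn the contents of memory.max into a byte limit (None = unlimited)."""
    value = text.strip()
    return None if value == "max" else int(value)


if __name__ == "__main__":
    # Inside a container on a cgroup v2 host, /sys/fs/cgroup is usually the
    # container's own cgroup, so these files show its limits.
    for name, parser in (("cpu.max", parse_cpu_max), ("memory.max", parse_memory_max)):
        path = os.path.join("/sys/fs/cgroup", name)
        if os.path.exists(path):
            with open(path) as f:
                print(name, parser(f.read()))
```

For example, a container started with `docker run --cpus=0.5 --memory=256m …` would typically show `50000 100000` in `cpu.max` (0.5 CPUs) and `268435456` in `memory.max` (256 MiB).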

2. A Container (Process) can start up in one-twentieth of a second

Containers can be created much faster than virtual machines because a VM must load a 10–20 GB operating system from storage and boot it. A workload in a container uses the host server’s operating system kernel, avoiding that step. According to Miles Ward, Google Cloud Platform’s Global Head of Solutions, a container (process) can start up in ~1/20th of a second, compared to a minute or so for a modern VM. When development teams adopt Docker, they add a new layer of agility and productivity to the software development lifecycle.

Image: Plesk Onyx

That speed lets a development team get project code running, test code in different ways, or launch additional e-commerce capacity on its website, all very quickly.
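If you want to get a feel for these numbers on your own machine, a small timing harness is enough. This Python sketch (the helper name `time_startup` is hypothetical) measures the median wall-clock time to start and finish a command; the default baseline is a plain process, since a container is ultimately a process too:

```python
import subprocess
import sys
import time
from statistics import median


def time_startup(cmd, runs=5):
    """Return the median wall-clock seconds to start and finish `cmd`."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
        samples.append(time.perf_counter() - start)
    return median(samples)


if __name__ == "__main__":
    # Baseline: a plain process (a fresh Python interpreter that does nothing).
    print("process  :", time_startup([sys.executable, "-c", "pass"]))
    # With Docker installed and the image already pulled, you could try:
    # print("container:", time_startup(["docker", "run", "--rm", "alpine", "true"]))
```

Results vary by machine, and `docker run` also includes the client and daemon round-trip on top of starting the process itself, so treat the numbers as rough orders of magnitude rather than a benchmark.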

3. Containers have proven themselves on a massive scale

The world’s most innovative companies are adopting microservices architectures, in which applications are built from loosely coupled services. For example, you might have your Mongo database running in one container, your Redis server in another and your Node.js app in a third. Docker has made it much easier to link these containers together into an application, so you can scale or update components independently in the future.
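As a sketch of that Mongo/Redis/Node.js setup, the Python snippet below generates the Docker CLI commands that put each service in its own container on a shared user-defined network. It only builds and prints the commands rather than running them. `mongo` and `redis` are official Docker Hub images; the network name `app-net` and the image `my-node-app` (standing in for an image built from your own Node.js code) are hypothetical:

```python
import shlex

NETWORK = "app-net"  # hypothetical network name

# (container name, image); "my-node-app" is a hypothetical image of your app.
SERVICES = [
    ("mongo", "mongo"),
    ("redis", "redis"),
    ("web", "my-node-app"),
]


def compose_commands(network, services):
    """Return docker CLI commands (as argument lists) that run each service in
    its own container, all attached to one user-defined network."""
    cmds = [["docker", "network", "create", network]]
    for name, image in services:
        cmds.append(
            ["docker", "run", "-d", "--name", name, "--network", network, image]
        )
    return cmds


if __name__ == "__main__":
    for cmd in compose_commands(NETWORK, SERVICES):
        print(shlex.join(cmd))
```

On a user-defined network, Docker’s embedded DNS lets containers reach each other by name, so the Node.js app could connect to `mongodb://mongo:27017` and `redis://redis:6379`. This is the approach Docker currently recommends over the legacy `--link` flag, and it is what tools like Docker Compose set up for you.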

According to InformationWeek, Google is another example. It is the world’s biggest implementer of containers, which the company uses for its internal operations. In running Google Search, it uses containers on their own, launching about 7,000 containers every second and about 2 billion every week. The significance of containerization is that it is creating a standard definition and a corresponding reference runtime, which industry players need in order to move containers between different clouds (Google, AWS, Azure, DigitalOcean,…). This will allow applications and containers to become the portability layer going forward.
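The two InformationWeek figures are easy to cross-check with a little arithmetic. A week has 604,800 seconds, so a sustained 7,000 launches per second would add up to roughly 4.2 billion per week, while 2 billion per week averages out to roughly 3,300 per second. The figures line up only if 7,000 per second describes a peak rather than an average rate. A short Python check:

```python
SECONDS_PER_WEEK = 7 * 24 * 60 * 60  # 604,800

quoted_rate_per_second = 7_000
quoted_per_week = 2_000_000_000

# What 7,000 launches per second would add up to over a full week
sustained_week_total = quoted_rate_per_second * SECONDS_PER_WEEK

# The average per-second rate implied by 2 billion launches per week
implied_average_rate = quoted_per_week / SECONDS_PER_WEEK

print(f"7,000/s for a week = {sustained_week_total:,}")      # 4,233,600,000
print(f"2 billion/week avg = {implied_average_rate:,.0f}/s")  # 3,307/s
```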

Docker helped create the Open Container Initiative (OCI), a group formed on June 22, 2015. It exists to provide a standard format for container images and a specification for container runtimes. This helps avoid vendor lock-in and means your applications will be portable between many different cloud providers and hosts.
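To give a flavour of what such a standard image format looks like, the Python sketch below builds a minimal image manifest in the shape described by the OCI Image Format Specification: a config blob and a list of layer blobs, each referenced by a content descriptor (media type, SHA-256 digest and size). The helper names are our own, and the blob contents in the usage example are placeholders; a real config is a JSON document and real layers are gzipped tarballs:

```python
import hashlib
import json

# Media types defined by the OCI Image Format Specification.
MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"
CONFIG_TYPE = "application/vnd.oci.image.config.v1+json"
LAYER_TYPE = "application/vnd.oci.image.layer.v1.tar+gzip"


def descriptor(media_type: str, blob: bytes) -> dict:
    """Content descriptor: a blob is addressed by the SHA-256 of its bytes."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(blob).hexdigest(),
        "size": len(blob),
    }


def image_manifest(config: bytes, layers: list) -> dict:
    """Build a minimal OCI image manifest for a config blob and layer blobs."""
    return {
        "schemaVersion": 2,
        "mediaType": MANIFEST_TYPE,
        "config": descriptor(CONFIG_TYPE, config),
        "layers": [descriptor(LAYER_TYPE, layer) for layer in layers],
    }


if __name__ == "__main__":
    # Placeholder bytes only; not a usable image.
    manifest = image_manifest(
        b'{"architecture":"amd64","os":"linux"}', [b"layer-1", b"layer-2"]
    )
    print(json.dumps(manifest, indent=2))
```

Because every blob is addressed by its digest, any registry or runtime that follows the specification can fetch and verify the same image, which is the portability the OCI is aiming for.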

4. Containers are “lightweight”

As mentioned before, containers running on a single machine share the same operating system kernel, so they start almost instantly and use less RAM than VMs. Docker, for example, has made it much easier for anyone (developers, sysadmins and others) to take advantage of containers to quickly build and test portable applications. It lets anyone package an application on their laptop that can then run unmodified on any public cloud, private cloud or even bare metal. The mantra is: “build once, run anywhere.”

5. Docker has become synonymous with containers

Docker is rapidly changing the rules of the cloud and upending the cloud technology landscape, smoothing the way for microservices, open source collaboration and DevOps. It is changing both the application development lifecycle and cloud engineering practices.

Stats:

- 2B+ Docker Image Downloads

- 2000+ contributors

- 40K+ GitHub stars

- 200K+ Dockerized apps

- 240 Meetups in 70 countries

- 95K Meetup members

Every day, lots of developers are happily testing or building new Docker-based apps with Plesk Onyx. Understanding where the Docker fire is spreading is key to staying competitive in an ever-changing world.

Web professionals understood that containers would be much more useful and portable if there were one way of creating them and moving them around, instead of a proliferation of competing container formats and engines. Docker is, at the moment, that de facto standard.

They’re just like shipping containers, as Docker’s CEO Ben Golub likes to say. Every trucking firm, railroad and marine shipyard knows how to pick up and move a standard shipping container, and Docker containers are welcome in the same way across a wide variety of computing environments.

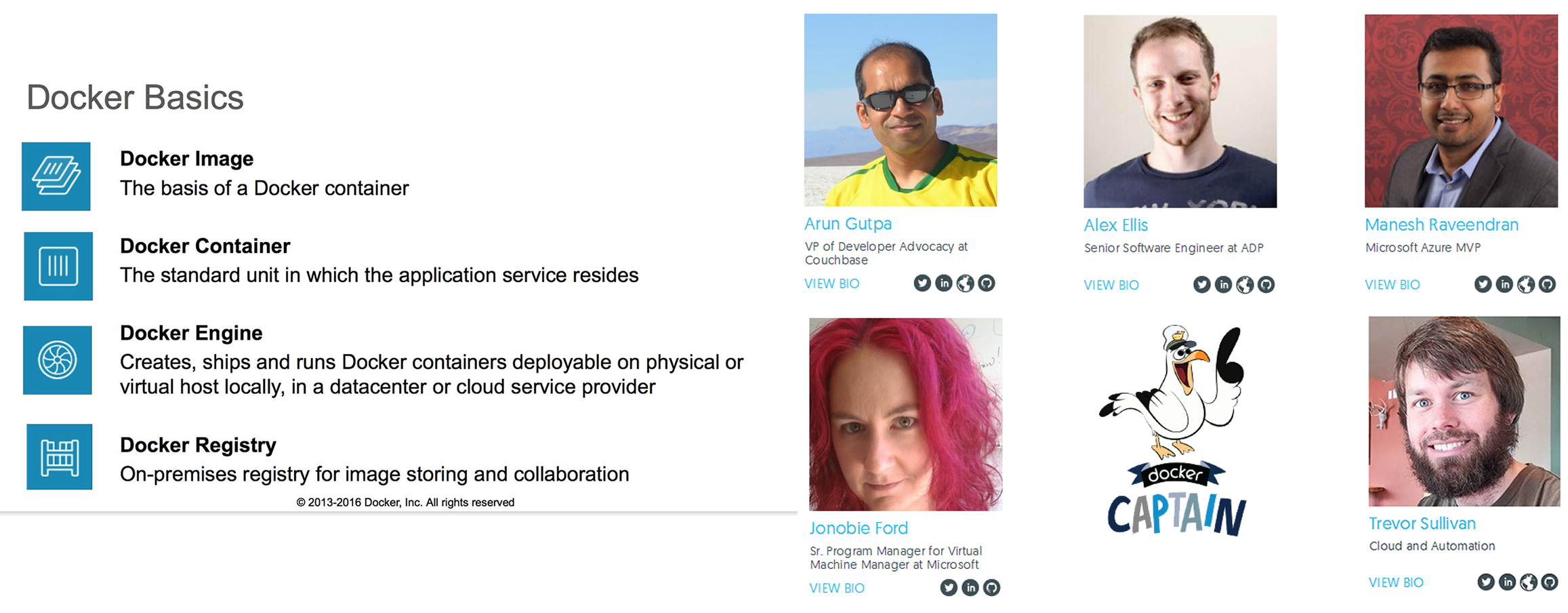

6. Docker’s ambassadors: the Captains

Have you met the Docker Captains yet? There are over 67 of them right now, spread all over the world. Captains are Docker ambassadors (not Docker employees), and their genuine *love* of all things Docker has a huge impact on the community.

Their contributions include blogging, writing books, speaking, running workshops, creating tutorials and classes, offering support in forums, and organizing and contributing to local events.

Here, you can find out how to follow all the Captains without having to navigate through more than 67 web pages.

The Docker Community offers you the Docker basics, and lots of different ways to engage with other Docker enthusiasts who share a passion for virtual containers, microservices and distributed applications.

Got a cool Docker hack? Looking to organize, host or sponsor Docker meetups? Want to share your Docker story?

Get involved with the Docker Community here.

7. Alex Ellis – Docker Captain

I became a Docker Captain after being nominated by a Docker Inc. employee who had seen some of my training materials and my activity in the community, helping local developers in Peterborough understand containers and how they fit into this shifting technology landscape. The energy and enthusiasm of Docker’s team is what led me to start this journey on the Captains’ programme.

It’s all about raising up new leaders in the community to advocate the benefits of containers for software engineering. We also write and speak about exciting new features in the Docker ecosystem and make ourselves present at conferences, meet-up groups and in the marketplace. Start my self-paced, Hands-On Docker tutorial here. If you have questions or want to talk, I’m on Twitter.

Thank you to Docker Captain Alex Ellis for co-authoring the introduction to this write-up and for providing feedback and technical insights on containers.

Be well, do good, and stay Plesky!

Cheers,

Jörg

Sources: Docker.com, Alex Ellis, Google Cloud Platform Blog, InformationWeek, Freecodecamp
